Product Hunt — The best new products, every day

www.producthunt.com

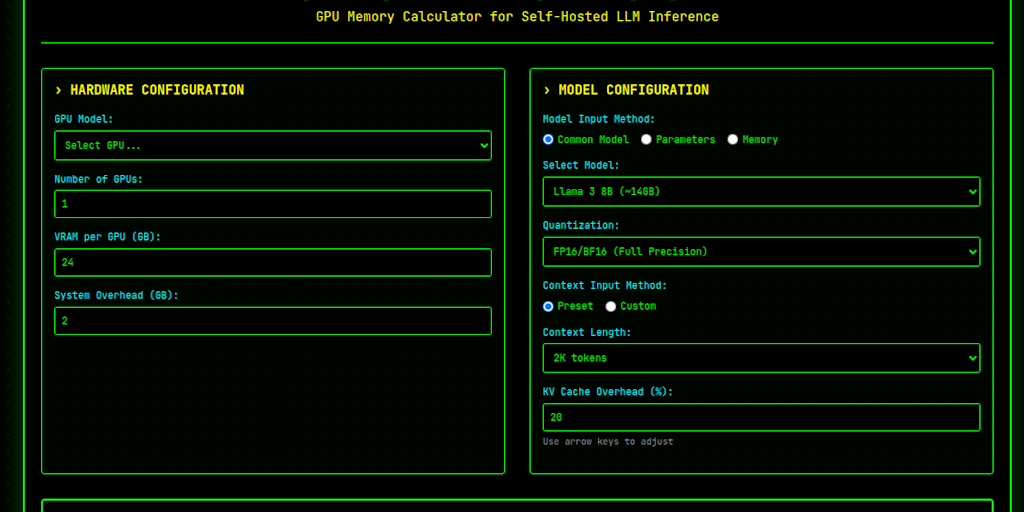

SelfHostLLM: Calculate the GPU memory you need for LLM inference | Product Hunt

Calculate GPU memory requirements and the maximum number of concurrent requests for self-hosted LLM inference. Supports Llama, Qwen, DeepSeek, Mistral, and more. Plan your AI infrastructure efficiently.